Exploring the Frontiers of Artificial Intelligence: From Theory to Reality

Introduction

In the ever-evolving landscape of technology, one concept has captured our collective imagination and sparked endless discussions: Artificial Intelligence (AI). From its theoretical origins to its practical applications, AI has transformed from a futuristic dream to a present-day reality, shaping industries and our daily lives in profound ways.

The term “Artificial Intelligence” is credited to John McCarthy, who used it in the proposal for the 1956 Dartmouth Conference, where researchers gathered to discuss the possibility of creating machines that could simulate human intelligence. This conference marked the beginning of the formal study of AI as a field of research.

The Genesis of AI

The journey of AI began with the ambitious goal of creating machines capable of mimicking human intelligence. Early pioneers like Alan Turing laid the groundwork by introducing the concept of a machine that could simulate any human’s thought process. Turing’s famous test, known as the Turing Test and proposed in 1950, judges a machine by whether its conversational responses can be told apart from a human’s.

AI, or Artificial Intelligence, refers to the simulation of human intelligence processes by machines, particularly computer systems. This usually involves reproducing human abilities through algorithms and systems, such as understanding natural language, recognizing patterns, solving complex problems, and learning from experience.

AI’s progression can be understood in terms of its capabilities. Narrow or Weak AI involves systems designed to perform specific tasks, such as image recognition, language translation, or playing games like chess and Go. These systems excel in their dedicated domains but lack broader understanding. Achieving General AI remains a complex challenge, as it requires the development of adaptable reasoning, self-awareness, and an understanding of context, a goal that still lies ahead.

AI can be broadly categorized into two main types:

- Narrow or Weak AI: This type of AI is designed and trained for a specific task. It excels in that specific task but lacks general intelligence. Examples include virtual personal assistants like Siri, recommendation systems used by streaming services, and image recognition systems.

- General or Strong AI: This is the theoretical concept of an AI that possesses human-like cognitive abilities. Such a system would understand, learn, and apply knowledge across a wide range of tasks, just like a human, and could perform any intellectual task that a human being can do.

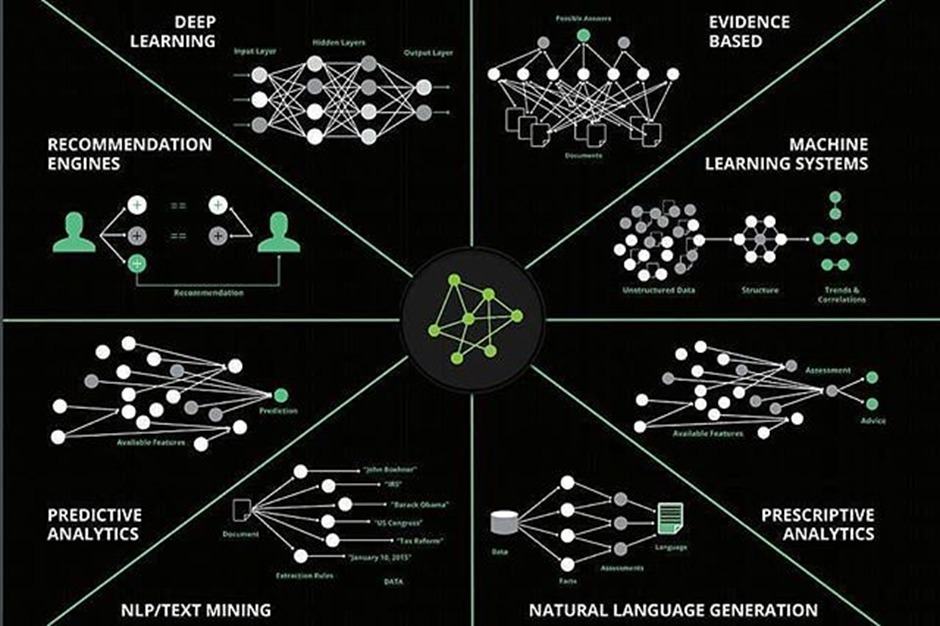

AI’s Building Blocks: Machine Learning and Deep Learning

Machine learning and deep learning are both subfields of artificial intelligence (AI) that focus on enabling computers to learn and make decisions or predictions without being explicitly programmed for each specific task. Machine Learning (ML) is a crucial subset of AI, where algorithms allow computers to learn patterns from data. It includes techniques like supervised learning, unsupervised learning, and reinforcement learning.

Deep Learning, a subset of ML, is a specialized form of machine learning that uses neural networks with multiple layers, inspired by the human brain’s structure, to model complex patterns in data. These networks have enabled groundbreaking achievements in image and speech recognition, natural language processing, and even autonomous systems.
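To make "learning patterns from data" concrete, here is a minimal sketch of supervised learning in plain Python. It is an illustration only, not a method described in this article: the function name `fit_line`, the learning rate, the number of epochs, and the toy data are all assumptions chosen for the example. The program is never told the rule y = 2x + 1; it estimates the slope and intercept from labeled examples by gradient descent on the mean squared error.

```python
def fit_line(xs, ys, lr=0.05, epochs=2000):
    """Fit y ≈ w*x + b by gradient descent on mean squared error.

    fit_line, lr and epochs are hypothetical names and values
    picked for this illustration.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy labeled data generated from y = 2x + 1 (an assumption for the demo).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = fit_line(xs, ys)
print(round(w, 2), round(b, 2))  # prints: 2.0 1.0
```

Deep learning follows the same idea of adjusting parameters to reduce an error, but with many layers of parameters instead of a single slope and intercept.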

Real-World Applications

AI’s impact is visible in diverse industries. In healthcare, AI aids in medical diagnoses and drug discovery, leveraging its ability to analyze vast amounts of medical data. Financial institutions use AI for fraud detection, risk assessment, and algorithmic trading. AI-powered virtual assistants like Siri and Alexa have become part of our daily lives, understanding and responding to our queries. Smart cities use AI for traffic management, energy optimization, and public safety. The entertainment industry employs AI for content recommendation and generation, enriching user experiences.

As AI advances, ethical questions emerge. The issue of bias in AI systems, reflecting the biases present in training data, raises concerns about fairness. The potential impact of AI on employment, privacy, and security is also subject to debate. Striking a balance between innovation and responsible use is essential to ensure AI benefits society as a whole.
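One simple way to put a number on the fairness concern is to compare how often a system gives a positive outcome to different groups. The sketch below computes that gap, sometimes called the demographic parity difference. It is only an illustration under stated assumptions: the function names, the group labels "a" and "b", and the predictions are all made up for the example, and a real audit would use several metrics, not just this one.

```python
from collections import defaultdict


def positive_rates(predictions, groups):
    """Return the share of positive predictions (1s) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between groups."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs (1 = approved, 0 = rejected) for two groups.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(positive_rates(preds, groups))          # {'a': 0.75, 'b': 0.25}
print(demographic_parity_gap(preds, groups))  # 0.5
```

A gap of zero means both groups receive positive outcomes at the same rate; a large gap is a signal to look more closely at the training data and the model.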

Future Horizons

The journey of AI is far from over. Ongoing research explores the boundaries of AI’s capabilities, aiming to bridge the gap between Narrow and General AI. Quantum computing may eventually accelerate certain AI tasks, although practical gains have yet to be demonstrated. Interdisciplinary collaborations with neuroscience could provide insights into replicating human cognitive processes. Likely directions include:

- Advanced Machine Learning Models: AI will likely see the development of more sophisticated machine learning models, potentially surpassing the capabilities of GPT-3.5. This could lead to better natural language understanding, more accurate image and video analysis, and enhanced decision-making systems.

- AI Ethics and Bias Mitigation: As AI systems become more integrated into our lives, there will be an increasing focus on addressing biases and ethical concerns. Research and development in fairness, transparency, and accountability will be crucial to ensure that AI benefits all of humanity without causing harm.

- AI in Healthcare: AI applications in healthcare could become more prevalent, aiding in disease diagnosis, drug discovery, personalized treatment plans, and remote patient monitoring. This could potentially lead to more efficient and accurate medical practices.

- Autonomous Systems: Progress in AI and robotics might lead to further advancements in autonomous vehicles, drones, and even robotic assistants for tasks like household chores or caregiving.

- AI-Augmented Creativity: AI tools could become more integrated into creative processes, assisting artists, writers, designers, and musicians in generating ideas, content, and designs.

- Natural Language Understanding and Interaction: Conversational AI could become more seamless and capable of understanding context and emotions, leading to more meaningful interactions between humans and machines.

- AI in Business and Industry: Industries such as manufacturing, agriculture, finance, and customer service could benefit from AI-driven optimizations, predictive analytics, and automation.

- AI and Climate Change: AI could play a role in addressing climate change by analyzing complex environmental data, optimizing energy consumption, and aiding in sustainable practices.

- Collaboration Between Humans and AI: The future might see AI systems becoming more like collaborators, working alongside humans to enhance productivity and creativity rather than replacing human jobs.

- Regulation and Policy Development: Governments and organizations will likely develop regulations and policies to ensure the responsible and safe use of AI technologies, covering areas like data privacy, algorithmic transparency, and liability.

- Quantum Computing and AI: The synergy between quantum computing and AI could lead to breakthroughs in solving complex problems and optimizing AI algorithms.

Artificial Intelligence has evolved from a theoretical concept to a technological force that shapes our world. With applications spanning industries and disciplines, AI continues to reshape our understanding of what’s possible. As we move forward, the ethical considerations and collaborative efforts will determine how AI contributes to a more innovative, equitable, and interconnected future. So, whether you’re fascinated by the theoretical foundations or excited about the tangible applications, AI remains an enthralling journey into the potential of human ingenuity and machine capabilities.